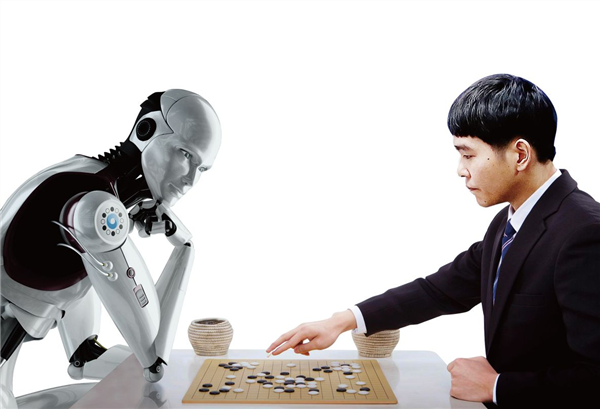

In March 2016, AlphaGo, developed by Google's DeepMind, defeated 9-dan professional Lee Sedol 4:1, causing a worldwide sensation. At the end of 2016, AlphaGo returned under the name "Master" and played rapid online games against dozens of top Chinese, Japanese and Korean players without losing a single game. This time, even Ke Jie was left speechless.

Then Libratus, developed by Carnegie Mellon University, defeated four of the world's top professionals at Texas Hold'em. Unlike Go, Texas Hold'em is an imperfect-information game: players must guess their opponents' hands and read their minds. Dong Kim, the player who lost the least money, said after the match that before it began he had not expected the AI to be so strong; it seemed able to see his cards, as though it were cheating.

Although top Internet companies such as Google, Facebook, Microsoft and Apple are all investing in AI and deep learning, AI has not yet been applied at scale in everyday life. The AI we encounter every day amounts to little more than teasing Siri when we are bored.

AI has not yet become part of daily life largely because most Internet companies develop underlying algorithms in isolation from the industries that would use them.

In other words, the scientists who understand AI and deep learning do not understand the real problems of each industry, while companies within those industries do not dare to touch technologies like AI. We will therefore continue to discuss with you how AI can transform various industries. Today, Hu Tianshuo, senior investment manager at Xinghe Internet, shares the opportunities in education + AI; we hope it helps.

The following is for your reference.

I. What is deep learning?

What do AlphaGo, Libratus and the various self-driving technologies have in common? They all use the latest deep learning algorithms. Deep learning involves fairly advanced mathematics, and since readers may not have a strong science or engineering background, I will introduce it in the most accessible way possible.

Traditional machine learning is generally used to handle data that follows simple patterns: for example, predicting future trends from the global warming of recent years, or predicting which songs users who like Jay Chou will also like. Much complex data, however, is hard to capture with a simple mathematical formula. A formula describing a cat, for instance, would have to be extremely complicated, because there are black cats and white cats, and cats sitting, lying down or catching mice. Deep learning, by contrast, can identify and analyze complex objects such as images, sounds and text.
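To make the contrast concrete, here is a minimal sketch of the kind of "simple pattern" traditional machine learning handles well: fitting a straight-line trend by ordinary least squares and extrapolating it. The yearly values are made-up toy numbers, not real climate data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical toy series: a quantity rising by 0.5 per year from 10.0
years = [0, 1, 2, 3, 4]
values = [10.0, 10.5, 11.0, 11.5, 12.0]

slope, intercept = fit_line(years, values)
prediction = slope * 10 + intercept  # extrapolate the trend to year 10
print(slope, intercept, prediction)
```

Two numbers capture this whole "law". A cat detector has no comparably small formula, which is the gap deep learning fills.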

About deep learning, remember the following points.

1. Deep learning can relate two different kinds of complex data

Given enough images labeled with their corresponding text, deep learning can predict the text for similar images.

Replace the images with sound, and deep learning moves from image recognition to audio. If each sound clip is labeled with its transcript, it can perform speech recognition; if it is labeled with the speaker's identity, it can perform voiceprint recognition. Reversing the mapping gives speech synthesis.

Images can be mapped not only to text but also to other images: sketches can be mapped to real photos, or real photos to artistic renderings.

Text can also be mapped to text. Mapping Chinese to English gives translation. Pairing long news reports with their summaries allows headlines to be generated automatically. Pairing what people say with plausible replies gives a chatbot. Many other combinations are possible. For example, the basic principle of self-driving is a mapping from camera images to steering wheel/throttle/brake commands. In practice, of course, it is not that simple: it requires different types of deep learning networks such as CNN/RNN/LSTM/GAN, which I will not expand on here. Interested readers can search online.
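The common thread in all these examples is learning a mapping from labeled (input, output) pairs. As a deliberately tiny stand-in, the sketch below trains a single perceptron, a one-neuron ancestor of deep networks rather than a deep network itself, on hypothetical 2-D feature vectors labeled 0 or 1. Real systems replace the hand-made features with raw pixels or audio and the single neuron with many stacked layers.

```python
def train_perceptron(pairs, epochs=20, lr=1.0):
    """Learn weights w and bias b so that step(w . x + b) reproduces each label."""
    dim = len(pairs[0][0])
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, label in pairs:
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            pred = 1 if activation > 0 else 0
            update = lr * (label - pred)  # zero when the prediction is already right
            if update != 0:
                errors += 1
                w = [wi + update * xi for wi, xi in zip(w, x)]
                b += update
        if errors == 0:  # every training pair is now mapped correctly
            break
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

# Hypothetical labeled pairs (feature vector, label); here the label is logical AND
pairs = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(pairs)
print([predict(w, b, x) for x, _ in pairs])
```

Nothing in the training loop knows what the inputs mean; swap in other labeled pairs and the same procedure learns a different mapping, which is the point made above at a much larger scale.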

2. Compared with human learning, deep learning requires a lot of data.

At present, a major bottleneck of deep learning is that high accuracy requires a large amount of labeled data for supervised learning. For example, a person who has never seen a kangaroo needs to see only one photo of one to recognize whether the next photo shows a kangaroo. In ImageNet, by contrast, each category (such as kangaroo or truck) must appear thousands of times before a computer can learn it. Similarly, when DeepMind trains a computer to play video games, the computer learns only after thousands of rounds, whereas an ordinary person gets the hang of it after a dozen or so. Even AlphaGo and Libratus, which can defeat the top human masters, have played far more games against themselves than any top master has played in a lifetime.

From this perspective, deep learning is "dumber" than people: given the same amount of data, deep learning algorithms generalize less well. Future algorithms (so-called one-shot learning) should bring breakthroughs in this direction.

3. Not only are deep learning tools open source; most deep learning algorithms, and even trained models, are open source too.

The field of deep learning has developed rapidly in recent years. Most researchers have abandoned the traditional cycle of submitting papers and waiting several months for publication; instead, they upload their papers to arXiv as soon as they are finished. This has greatly accelerated research: often, within a few weeks of a new result appearing, even newer results surpass the original algorithm. Moreover, a great deal of code is open source on GitHub (all the earlier examples can be found in open-source GitHub projects), so most companies adopting AI do not need to do basic research on algorithms; they only need to study and apply the latest international research results. Companies can treat the underlying deep learning tools entirely as a black box. The only real work is producing enough manually annotated data and doing some simple processing and packaging of the raw outputs of deep learning.
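The "simple processing and packaging" step can be as small as turning a model's raw scores into labeled probabilities. A minimal sketch, assuming a pretrained open-source classifier (not shown, treated as a black box) has already returned one raw score (logit) per class; the labels, scores and the function name `wrap_prediction` are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def wrap_prediction(logits, labels, k=2):
    """Package a black-box model's raw output as the top-k (label, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return [(label, round(p, 3)) for label, p in ranked[:k]]

# Hypothetical raw output of an image classifier for one photo
labels = ["kangaroo", "truck", "cat"]
raw_logits = [2.0, 0.5, 1.0]
result = wrap_prediction(raw_logits, labels)
print(result)
```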

4. Deep learning is still far from true general AI

Deep learning today is like a 3-to-4-year-old child: it can connect a picture of a car, the word "car" and the sound of a car. In essence it can match complex data, but there is much it cannot do, for example:

Hold meaningful conversations with people

Write a logically coherent article

Make moral judgments in the event of a car accident

Write computer programs

But this does not prevent us from using deep learning in the industry at this stage.

II. How can AI be combined with education?

In the previous section we explained what AI based on deep learning can potentially do. In this part, we look at how deep learning can combine with the education industry from several angles: images, sound, text and adaptive learning.

2.1 Images

At present, the most mainstream application of image recognition is photo-based question search; typical examples are Zuoyebang, Xuebajun, Xiaoyuan Souti and Afanti.

Traditional K12 applications mostly depend on students taking the initiative to watch videos and do exercises, which makes it hard for them to fit into students' real learning situations. With photo search, students arrive carrying a concrete problem or confusion, which is why photo search apps are the most active of all K12 applications. The core technology of photo search is to map the image to text, then match that text against an existing question bank. As mentioned above, thanks to the spread of open-source technology, image recognition no longer has the high barrier to entry it once had. The biggest barriers now are the brand and the size of the question bank.
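The second half of that pipeline, matching recognized text against a question bank, can be sketched with Python's standard `difflib`. This assumes the image-to-text (OCR) step has already produced a possibly noisy string; the question bank, its IDs, the function name `best_match` and the 0.6 threshold are all hypothetical, and a production system with millions of questions would need an index rather than a linear scan.

```python
from difflib import SequenceMatcher

# Hypothetical question bank: question ID -> question text
QUESTION_BANK = {
    "q1": "Solve for x: 2x + 3 = 11",
    "q2": "What is the area of a circle with radius 3?",
    "q3": "Factor the expression x^2 - 9",
}

def best_match(ocr_text, bank, threshold=0.6):
    """Return (question_id, similarity) of the closest entry, or (None, score) if nothing is close."""
    best_id, best_score = None, 0.0
    for qid, text in bank.items():
        # ratio() = 2 * matching characters / total length, between 0.0 and 1.0
        score = SequenceMatcher(None, ocr_text.lower(), text.lower()).ratio()
        if score > best_score:
            best_id, best_score = qid, score
    if best_score < threshold:
        return None, best_score
    return best_id, best_score

# OCR output with typical recognition errors ("5olve", "ll" instead of "11")
qid, score = best_match("5olve for X: 2X + 3 = ll", QUESTION_BANK)
print(qid, round(score, 2))
```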

Of course, photo search itself is very controversial. If a student gets the answer on a phone after getting stuck and thinking hard, that is a good self-learning process. But if the student mindlessly copies every answer into the workbook, there is no learning value. Whatever the outcome of the controversy, one thing deserves recognition: photo search has broken the teacher's monopoly on the standard answers. When assigning homework, teachers now have to consider how to get students to practice seriously when every student already has the correct answers.

Beyond recognizing printed questions, the accuracy of handwriting recognition is steadily improving. Some specialized companies also recognize formulas and scientific symbols; I will not go into that here.

Three developments can be foreseen in combining image recognition with education:

2.1.1 Connecting paper books with online education

For thousands of years, paper books have carried almost all human knowledge. However, the static text and images on paper contrast sharply with interactive online education. Although today's AR books use AR mostly as a gimmick, image recognition and augmented reality have the potential to breathe new life into paper books, turning them, before they disappear completely, into a new channel of learning and communication between authors and readers and among readers themselves. With sufficiently powerful image recognition, a book will not need an embedded QR code; the content of the book itself becomes the "QR code". "The Ugly Duckling" could become a 3D game. "New Concept English" could directly assess how well you read aloud. A classic passage of "Dream of Red Mansions" could carry the comments of tens of thousands of readers. Readers of "The Three-Body Problem" could communicate directly with Da Liu (Liu Cixin).

2.1.2 Connecting the real world with online education

Microsoft previously launched an app, "Microsoft Knowledge". Although its interactive experience and recognition rate are not ideal, it represents a new way of learning: learning whatever you see, or just-in-time learning. In the future, underlying technology providers will recognize objects in images with higher accuracy and lower latency, and learning languages and science will no longer revolve around "words" or "concepts" but around the user's real-world "scenes".

2.1.3 Motion capture and online education

The fundamental reason many subjects such as sports, musical instruments, martial arts, dance and painting are hard to teach online is the lack of an educator who can give learners timely, effective feedback on their posture. As vision-based motion capture matures and its cost gradually falls (these will certainly not be solutions based on wearable devices), every learner will be able to enjoy one-on-one personalized guidance from a top AI coach.
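Once a pose estimator (assumed here, not shown) has located body keypoints in a video frame, posture feedback can start as simple geometry: compute a joint angle and compare it with a target range. The keypoint coordinates, the elbow example, the function names and the 10-degree tolerance below are hypothetical.

```python
import math

def joint_angle(a, b, c):
    """Angle at keypoint b, in degrees, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def elbow_feedback(shoulder, elbow, wrist, target=90.0, tolerance=10.0):
    """Compare the measured elbow angle with a hypothetical target posture."""
    angle = joint_angle(shoulder, elbow, wrist)
    if abs(angle - target) <= tolerance:
        return angle, "good"
    # A larger angle means a straighter arm, so the learner should bend more
    return angle, "bend more" if angle > target else "straighten a little"

# Hypothetical 2-D keypoints (pixels) from two frames
print(elbow_feedback(shoulder=(0, 100), elbow=(0, 0), wrist=(100, 0)))
print(elbow_feedback(shoulder=(0, 100), elbow=(0, 0), wrist=(100, -100)))
```

An AI coach would run checks like this on every frame and every joint that matters for the movement being taught.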

For educational institutions, motion capture, especially facial capture, is a new way to protect their own IP. Institutions have a love-hate relationship with star teachers: star teachers can attract many high-quality students, but they can also leave at any time and take those students with them. If, however, students face not a real person but a virtual IP character such as "Hatsune Miku", they will ultimately identify with that character rather than with a specific teacher.

2.2 Sound

Having covered images, let's talk about combining sound with AI. At present, the most popular voice application is speech evaluation: a student says a sentence and the machine gives a score. Typical examples are iFlytek, Liulishuo, 17zuoye and Boxfish.

The main opportunity is that students and parents, as well as schools and education bureaus, are paying increasing attention to spoken English. Although on the surface the college entrance examination is "weakening English", in fact, for admission to a prestigious school, English, and spoken English in particular, carries more weight than in the past. At present, the oral evaluation in most spoken-English apps on the market either judges pronunciation accuracy on a prescribed sentence or handles semi-open exchanges. The real difficulty is evaluating the quality of an open dialogue, which is really more a matter of the text understanding discussed in the next section. In this direction, iFlytek has signed contracts with many provinces to try automatically grading the open-ended oral questions of the college entrance examination, which also relates directly to the NLP introduced next.
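For the simplest case mentioned above, a prescribed sentence, one crude way to score an attempt is to compare the words a speech recognizer heard with the reference sentence using word-level edit distance (word error rate). This is only a sketch: it assumes a speech recognition step (not shown) has already transcribed the student's audio, and the 0-100 scoring formula is a hypothetical choice. Real evaluators typically assess pronunciation at a finer level, such as individual phonemes, which this does not attempt.

```python
def word_edit_distance(ref, hyp):
    """Minimum number of word substitutions, insertions and deletions between ref and hyp."""
    r, h = ref.split(), hyp.split()
    # dp[i][j] = distance between the first i reference words and the first j recognized words
    dp = [[0] * (len(h) + 1) for _ in range(len(r) + 1)]
    for i in range(len(r) + 1):
        dp[i][0] = i
    for j in range(len(h) + 1):
        dp[0][j] = j
    for i in range(1, len(r) + 1):
        for j in range(1, len(h) + 1):
            cost = 0 if r[i - 1] == h[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j] + 1,         # reference word missed
                           dp[i][j - 1] + 1,         # extra word spoken
                           dp[i - 1][j - 1] + cost)  # match or substitution
    return dp[len(r)][len(h)]

def sentence_score(reference, recognized):
    """Hypothetical 0-100 score: 100 * (1 - word error rate), floored at 0."""
    ref = reference.lower()
    wer = word_edit_distance(ref, recognized.lower()) / len(ref.split())
    return max(0.0, 100.0 * (1.0 - wer))

reference = "I would like a cup of tea"
print(sentence_score(reference, "I would like a cup of tea"))
print(sentence_score(reference, "I would like cup of tee"))
```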

One of the biggest future applications of sound is speech synthesis that the human ear cannot distinguish from a real voice. Google's WaveNet is already close to this level, but it is very slow to compute; Baidu has recently optimized its performance. The other is raising speech recognition accuracy to a new level. Both will likely be achieved and popularized within the next two or three years. By then, combined with virtual IP characters, many front-line teachers will gradually realize that they may move from "on stage" to "behind the scenes", and may even face unemployment.

In addition, sound recognition will have some applications in music teaching, which I will not expand on here.

2.3 Text (NLP)

Text processing, also known as natural language processing (NLP), has so far found its biggest application in traditional education in automatic essay grading. Because that market is small, I will not expand on it here.

Consider this: 99% of human knowledge is recorded in written words.

What matters most in a teacher's lecture is not his face or his tone but what he says. By the same token, the most important combination of online education and AI is neither sound nor image, but text. As discussed earlier, as long as the text is well defined, we can synthesize the most appealing voice and the most handsome virtual face. Online education would then no longer need "fixed videos". If the content of the lecture, that is, the text, can change for each student, it is as if every student had a dedicated one-on-one teacher.

The big problem AI must solve for online education is how to turn the dead knowledge in textbooks into a dialogue between teacher and student. The technical challenges here are numerous, including:

1. Automatic problem solving: automatically generating a detailed solution for a given question.

2. Intelligent homework grading: not just grading multiple-choice and true/false questions, but also grading the student's problem-solving process.

3. Intelligent Q&A: answering questions related to the subject.

4. Adaptive dialogue: sensing the student's learning state and continuously responding with appropriate interaction (see below).

All current solutions to these four problems are based on manually written rules, not on AI. However, the science and technology in this area are also advancing quickly. Abroad, Geosolver has tried to tackle the first problem, and teams in China are working on a "college entrance examination robot".
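To make "manually written rules" concrete for the second capability (checking the solution process rather than only the final answer), here is a hypothetical rule-based checker for arithmetic working. It assumes each line of a student's work has the form `expression = value` and reports the first line whose two sides disagree. It is a toy, far from the open-ended grading described above, and the function names are illustrative.

```python
import ast
import operator
from fractions import Fraction

# Rule table: the only operators this checker understands
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def evaluate(expr):
    """Safely evaluate +, -, *, / on integers with exact fractions (no eval())."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            return Fraction(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return walk(ast.parse(expr.strip(), mode="eval"))

def check_steps(steps):
    """Return the index of the first step whose two sides differ, or None if all steps hold."""
    for i, step in enumerate(steps):
        left, right = step.split("=")
        if evaluate(left) != evaluate(right):
            return i
    return None

# A student's working: the third step contains an arithmetic slip
work = ["3 * (4 + 2) = 3 * 6", "3 * 6 = 18", "18 - 5 = 12"]
print(check_steps(work))
```

Every rule here had to be written by hand, which is exactly why such approaches do not scale to proofs or free-form answers.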

2.4 Adaptive learning

At present, the adaptive products on the market are based on manually organized teaching models plus simple mathematical modeling, and the product form is also relatively simple: they assess students' mastery of knowledge points according to whether their answers are right or wrong. Typical examples are Knewton, Khan Academy and question-bank products, and the IRT algorithms they use are already open source. In practice, what is truly time-consuming and labor-intensive is the pedagogical research, and that is still done very roughly. After all, most adaptive learning products only care whether a question is answered right or wrong and cannot judge specifically why it is wrong. For the same fill-in-the-blank question, a teacher can tell at a glance from each student's answer which knowledge point that student has not mastered, whereas the system can only attribute the error to one fixed knowledge point. As for proof questions and open-ended questions, existing adaptive products cannot handle them at all.
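The IRT idea mentioned above can be sketched with its simplest variant, the one-parameter (Rasch) model: the probability that a student of ability θ answers an item of difficulty b correctly is 1 / (1 + e^-(θ - b)). The sketch estimates a student's ability from right/wrong answers by a coarse grid search over the likelihood. The item difficulties and responses are hypothetical, and real products may use richer models and proper numerical optimization. Note that the model sees only right or wrong, which is exactly the limitation described above.

```python
import math

def p_correct(theta, b):
    """Rasch (1PL) model: probability of a correct answer given ability theta and difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def log_likelihood(theta, difficulties, responses):
    """Log-probability of the observed right (1) / wrong (0) pattern at ability theta."""
    total = 0.0
    for b, r in zip(difficulties, responses):
        p = p_correct(theta, b)
        total += math.log(p) if r == 1 else math.log(1.0 - p)
    return total

def estimate_ability(difficulties, responses, lo=-4.0, hi=4.0, step=0.01):
    """Maximum-likelihood ability estimate via a simple grid search over [lo, hi]."""
    n = int(round((hi - lo) / step))
    grid = [lo + k * step for k in range(n + 1)]
    return max(grid, key=lambda t: log_likelihood(t, difficulties, responses))

# Hypothetical items for one knowledge point, ordered easy -> hard
difficulties = [-1.0, 0.0, 1.0, 2.0]
strong = estimate_ability(difficulties, [1, 1, 1, 0])
weak = estimate_ability(difficulties, [1, 0, 0, 0])
print(round(strong, 2), round(weak, 2))
# Predicted chance that the stronger student solves a new item of difficulty 1.5
print(round(p_correct(strong, 1.5), 2))
```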

So the real prerequisite for true adaptive learning is still the NLP/text processing capability mentioned earlier. Only when an algorithm can look at a question and know the correct answer, understand what the question-setter intends, and, on seeing a wrong answer, know which knowledge point the student has not mastered, will online education undergo a new reshuffle and adaptive learning become the mainstream way of learning.

Summary: With the development and spread of artificial intelligence, traditional ways of learning will be completely upended. Every student will have a one-on-one professional AI teacher and be able to learn all kinds of knowledge, no longer limited by family background, school or textbooks. The combination of speech synthesis, virtual IP, NLP and adaptive learning opens up huge business prospects. Of course, this vision is still far away. Until it is realized, we will still have to tighten our belts to buy homes in good school districts. However, deep learning is already providing tools for the education industry. Some companies will get ahead, and others will falter. The ultimate winner will be neither the company best at sales nor the one with the strongest AI R&D, but those that combine technology and teaching with product and promotion. These are also the companies we most look forward to investing in. That's all for today; see you next week.
